by Lizzy Baus

Quick Summary

Linked Data Cataloging Workflows is the sixth and final webinar in the From MARC to BIBFRAME series, presented by Xiaoli Li, and offered by the Association for Library Collections & Technical Services (ALCTS). The presenter covered the following topics: Background on the BIBFLOW project, cataloging workflows with BIBFRAME, and skills and training.

Linked Data Cataloging Workflows is the sixth and final webinar in the From MARC to BIBFRAME series, presented by Xiaoli Li, and offered by the Association for Library Collections & Technical Services (ALCTS). Since each session in the series builds on the one before it, consider reading our summaries of the earlier sessions before reading this article. Links to previous articles are under "Related Content" on the right side of this page.

Linked Data Cataloging Workflows covered the following topics:

- Background: BIBFLOW Project

- Cataloging workflows with BIBFRAME

  - Copy cataloging

  - Original cataloging

  - Creation of authority and minting URI

- Skills and training

BACKGROUND: BIBFLOW PROJECT

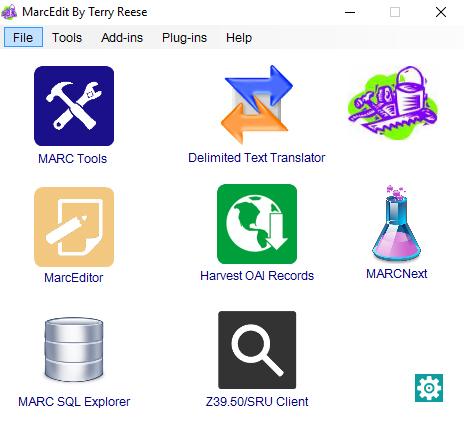

Xiaoli Li begins by providing some background on the BIBFLOW project. This was a two-year research partnership between Zepheira and the University of California, Davis, which aimed to assess the impact of BIBFRAME on academic library technical services workflows. Its purpose was "to understand ecosystem, test solutions, and provide a roadmap of how libraries can iteratively migrate to linked data without disrupting patron or business services." She goes on to explain in some detail the current "ecosystem", in which MARC and BIBFRAME/Linked Data information coexist (see title image). Li breaks the diagram down into separate, interconnected data pathways (ways to get ready for linked data) and discusses each in turn.

Pathway #1, dealing with MARC records only, moves from OCLC through a MARC URI tool and to the library's ILS. Previous sessions in this series (see Highlights from ALCTS webinar: Embedding URIs in MARC using MarcEdit; Highlights from ALCTS webinar: How to get from here to BIBFRAME) addressed the how-tos of this pathway, so Li does not dwell on them here.

Pathway #2 focuses on Linked Data only. Making up the top right corner of the full diagram, this pathway is somewhat aspirational. That is, the main center block appears with a question mark; the infrastructure (something analogous to the WorldCat or SkyRiver database) does not yet exist at scale.

Pathway #3 combines both these pathways and adds a few more programs to make up the whole diagram seen above. This is the hybrid ecosystem that bridges the gap between our current reliance on MARC and the desire to take advantage of the benefits offered by Linked Data. The complexity of this combined pathway allows for a slower, smoother transition; there is room for overlap between the two worlds while staff becomes more comfortable and confident with new systems.

For the rest of the presentation, Li focuses on the cataloging-related portion of the roadmap, which comprises the Linked Data Editor, OCLC, and Authorities boxes in the lower right corner.

CATALOGING WORKFLOWS WITH BIBFRAME

Li discusses two main tools for cataloging in BIBFRAME: Library of Congress' BIBFRAME Editor, and UC Davis' BIBFRAME Scribe.

One very large advantage of LC's BIBFRAME Editor is that it does not require the cataloger to know the BIBFRAME data model inside and out: it is already laid out for the user. This tool also features direct links to the RDA Toolkit for each field, allowing catalogers to concentrate on describing the resource in hand rather than how to encode and store the data.

BIBFRAME Scribe is one of the products that came out of the BIBFLOW project, and it is still something of a prototype; it is not yet fully functional for production. The interface of this tool is not as closely tied to cataloging rules as that of the BIBFRAME Editor, because its creators want it to remain useful to a wide range of audiences.

Li demonstrates a possible workflow for copy cataloging using the BIBFRAME Scribe tool. Beginning with a search by ISBN, the tool reaches out to several external sources to find existing records. When one is found and selected, Scribe will automatically populate several fields in the new local record (including, for instance, control numbers). All that is then required is for the cataloger to enter a local call number and export the record.
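The steps of this copy cataloging workflow can be sketched in a few lines of Python. This is only an illustration of the sequence described above (search by ISBN, pre-populate from a found record, add a local call number, export); it is not how BIBFRAME Scribe is implemented. The `SOURCES` table, the field names, the ISBN, and the JSON export format are all assumptions made for the example.

```python
import json

# Hypothetical external sources, each mapping an ISBN to an existing record.
# Real sources would be remote services; these in-memory tables are placeholders.
SOURCES = {
    "source_a": {},
    "source_b": {
        "9780000000002": {"title": "An Example Title", "control_number": "ex0001"},
    },
}


def search_by_isbn(isbn):
    """Return (source_name, record) for every source that holds the ISBN."""
    return [(name, records[isbn]) for name, records in SOURCES.items() if isbn in records]


def copy_catalog(isbn, call_number):
    """Build a local record from the first hit and export it as JSON text."""
    hits = search_by_isbn(isbn)
    if not hits:
        return None  # nothing to copy; the item needs original cataloging
    source, record = hits[0]
    local = dict(record)                 # pre-populate fields from the found record
    local["isbn"] = isbn
    local["derived_from"] = source
    local["call_number"] = call_number   # the only field the cataloger types in
    return json.dumps(local, sort_keys=True)


exported = copy_catalog("9780000000002", "Z699.35 .E93")
```

In this sketch the cataloger supplies only the call number; everything else, including the control number, is carried over from the selected record.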

The original cataloging workflow Li demonstrates uses the BIBFRAME Editor, which makes significant use of lookup services. One caveat for exploration of this tool: the BIBFRAME Editor is still using the BIBFRAME vocabulary version 1.0, while version 2.0 was released earlier this year. The BIBFRAME Editor offers a straightforward interface of buttons and auto-suggest search boxes to help the cataloger select, for example, the correct Library of Congress Name Authority File record for the author. Although the user sees only the text labels for these records, the Editor tool pulls the URI from the external service and stores that in the description being created. The same sort of interface also allows the cataloger to look up subjects, places, and so on. As the cataloger moves through the levels of the BIBFRAME data model, the Editor connects the pieces with URIs for each level of description.
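The idea that the cataloger sees a text label while the description stores a URI can be shown with a minimal Python sketch. It does not reproduce the BIBFRAME Editor or any real lookup service: the `NAME_AUTHORITY` table, the `example.org` URIs, and the function names are hypothetical stand-ins chosen for illustration.

```python
# Hypothetical stand-in for an external lookup service: label -> URI.
# The URIs are placeholders, not real authority identifiers.
NAME_AUTHORITY = {
    "Doe, Jane, 1950-": "http://example.org/authorities/names/n0001",
    "Doe, John, 1948-": "http://example.org/authorities/names/n0002",
}


def suggest(prefix):
    """Return sorted (label, uri) pairs whose label starts with the typed prefix."""
    prefix = prefix.casefold()
    return sorted(
        (label, uri)
        for label, uri in NAME_AUTHORITY.items()
        if label.casefold().startswith(prefix)
    )


def select_agent(description, label):
    """Link the chosen agent by URI; the label is kept only for display."""
    uri = NAME_AUTHORITY[label]
    description.setdefault("agents", []).append({"uri": uri, "label": label})
    return description


work = {"title": "An Example Title"}
matches = suggest("doe, ja")          # what the auto-suggest box would offer
select_agent(work, matches[0][0])     # the cataloger picks the first suggestion
```

The design point is that the link in `work` is the URI, so a later change to the display label in the authority source would not break the connection.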

One of the areas where cataloging will change the most, and which will require the most work to set up infrastructure, is the creation of authorities linked to URIs. In the BIBFRAME Editor, if no URI is found for a given person, place, or subject, a cataloger can type in a new access point as a placeholder, but it will not be connected to any URI. BIBFRAME Scribe has a more robust way to deal with such a situation: the user can enter as much information as is available (such as birth and death dates, alternative names, various subdivisions for subjects, and so on). When this description is saved, BIBFRAME Scribe will automatically mint a new URI for that concept.
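Minting a URI when a new authority description is saved can be sketched as follows. The webinar summary does not say how BIBFRAME Scribe generates its URIs, so the scheme here (a local base URI plus a random UUID) is purely an assumption, as are the `BASE_URI` namespace, the `mint_authority` function, and the field names.

```python
import uuid

# Hypothetical local namespace; a real deployment would use its own domain.
BASE_URI = "http://example.org/authorities/"


def mint_authority(store, preferred_label, **details):
    """Save a new local authority description and return its newly minted URI."""
    uri = BASE_URI + str(uuid.uuid4())   # random identifier, assumed for this sketch
    store[uri] = {"preferred_label": preferred_label, **details}
    return uri


local_authorities = {}
new_uri = mint_authority(
    local_authorities,
    "Doe, Jane",
    birth_date="1950",
    alternative_names=["Doe, J."],
)
```

Once minted, `new_uri` can be stored in a description in the same way as a URI returned by an external lookup service, which is what distinguishes it from an unlinked placeholder string.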

The creation of new authorities and URIs raises the question of whether it is time for libraries to stop restricting themselves to their own databases (such as the LCNAF) and start trusting other data sources (such as ORCID or ISNI). Li also asks whether libraries should use authority data from libraries in other countries.

Another issue is that the linked data version of the Library of Congress Subject Headings is incomplete at best, with over 5 million known concepts unrepresented by URIs. Li asks whether, in a Linked Data environment, it is still worthwhile to create and use pre-coordinated subject strings.

After raising these points, Li describes how the catalog data created relates to all the other parts of the roadmap diagram, serving human discovery as well as internal library operations.

SKILLS AND TRAINING

In discussing what skills will be needed in the near future, Li asserts that catalogers and metadata practitioners already have all of the skills necessary to create linked data using good tools. The skills she mentions include an understanding of cataloging rules, good judgement, and the ability to analyze relationships and make connections. Although it is nice to know about the theoretical underpinnings and data models, Li stresses that catalogers will not need to be experts in linked data, though a basic understanding of the concept and related terms will make life easier. Policymakers, on the other hand, should have a good understanding of the application of linked data technology, which may include some more specialized skills such as knowledge of data modeling, APIs, SPARQL, and so on. Above all, Li says, both practitioners and policymakers must be flexible, positive, curious, and willing to learn.

As for training, Li points to a very useful report published by the Program for Cooperative Cataloging Standing Committee on Training, which details available linked data training resources. She draws attention especially to the training provided by the Library of Congress and the Linked Data for Professional Education project.

Li closes the presentation with thanks and a call to keep learning and experimenting.